PROJECTS

Applications

Implementation of a framework for coupling plant, disease and pest models to simulate incidence, density and damage of aphids in wheat

Cereal aphids (Hemiptera: Aphididae) are economically important pests in all wheat-producing regions of Brazil. Adopting ecologically sound control measures, such as biological control, and reducing insecticide use both require an understanding of their population dynamics. To this end, the academic community has carried out experiments to develop prediction models and expert systems that estimate aphid populations, growth rates and the resulting damage. Informatics offers an effective and efficient way to tackle the complex problems of agricultural systems, especially in population dynamics. Although these systems meet specific needs, much remains to be done to represent spatial heterogeneity in more detail, along with its impact on crop and environmental performance. This work proposes a framework for coupling simulation models so that they can contribute to understanding the interactions within the vector (aphid)-virus-plant pathosystem. By integrating these simulation models, the goal is to validate the results against data from field experiments and, from there, to help evaluate the impact of insecticide application on the vector population and to assess the incidence of the virus in the crops. Click here to view the project’s GitHub repository.

Some information about the integration project with EMBRAPA and IFSul, in which I applied the following knowledge and tools:

– The ABISM model was developed with Java.

– Data management was done with PostgreSQL.

– Use of APIs and JSON files.

– Put into production with Docker.

– Model coupling with MPI.

spyware

Proof-of-concept malware project, built to learn how to recognize and defend against this kind of software.

This spyware is part of a larger project called Remote-Analyser, a system I developed to collect suspicious data from corporate and/or institutional computers, providing more efficient monitoring of these organizations’ assets.

The data-collection script was developed in Python with several supporting libraries. While running, it generates an Alert whenever something suspicious is typed and sends the data to the API Gateway. The collected data are the PC’s MAC address, the typed phrase that triggered the Alert, the processes running on the system, and a screenshot of the user’s screen. The script then logs in to the API Gateway and uses the returned token to save the data through the API. Click here to view the project’s GitHub repository. Click here to view the project’s Heroku API repository. Swagger interface link for the API.

versionaAe

versionaAe is a version control system built in Python using only parsing and system libraries. Click here to view the project’s GitHub repository.
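versionaAe’s internals are not described here. The following is only a generic sketch of one common way to build a version control system: content-addressed snapshots in the style of Git. It assumes a hypothetical `.vcs` folder inside the working directory and hypothetical `store_blob`/`commit` helpers. It also uses `hashlib`, which may not match the libraries the project actually chose.

```python
import hashlib
import os

REPO = ".vcs"  # hypothetical name for the repository folder


def store_blob(repo, data):
    """Save data as objects/<sha1-of-data> (only once) and return the hash."""
    objects = os.path.join(repo, "objects")
    os.makedirs(objects, exist_ok=True)
    blob_id = hashlib.sha1(data).hexdigest()
    path = os.path.join(objects, blob_id)
    if not os.path.exists(path):  # identical content is stored only once
        with open(path, "wb") as f:
            f.write(data)
    return blob_id


def commit(work_dir):
    """Snapshot every file under work_dir and return the manifest's hash,
    which identifies the whole snapshot."""
    repo = os.path.join(work_dir, REPO)
    entries = []
    for root, dirs, files in os.walk(work_dir):
        dirs[:] = [d for d in dirs if d != REPO]  # never version the repo itself
        for name in files:
            path = os.path.join(root, name)
            with open(path, "rb") as f:
                blob_id = store_blob(repo, f.read())
            entries.append(f"{blob_id} {os.path.relpath(path, work_dir)}")
    # The manifest lists "<blob hash> <relative path>" lines in a stable order.
    manifest = "\n".join(sorted(entries)).encode("utf-8")
    return store_blob(repo, manifest)
```

Because blobs are named by the SHA-1 of their content, files with identical content share one blob. Committing an unchanged tree returns the same manifest hash.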

API-Petshop

REST API for managing a pet shop, built with Node.js, MySQL and their associated technologies. It includes CRUD operations against the database, CRUD within the application itself, and a serializer. Click here to view the project’s GitHub repository.

sendData-Automation

Python automation bot for sending data by email, built mainly with the win32com and pandas libraries. Click here to view the project’s GitHub repository.

whatsAutom

Python bot for sending WhatsApp messages. Click here to view the project’s GitHub repository.

spring-kafka

E-commerce application built with ZooKeeper and Kafka, implemented in Java 16 with Spring Boot. The Kafka server and ZooKeeper run under Docker Compose inside a Vagrant machine. The project was made to study Kafka (and messaging services in general). Click here to view the project’s GitHub repository.

System OS

Independent project built in Java with a MySQL database. It has management windows for Clients, Users, OS and Reports, and it connects to the database, which is hosted on localhost using XAMPP. Click here to view the project’s GitHub repository.

Account Storage

Python program that stores names, emails and passwords. Click here to view the project’s GitHub repository.

api-Relatorio

Proof-of-concept project that uses Spring to create a reporting API, with an interface that makes the API easier to use and well documented. It supports full CRUD on reports. Java 16 is the base language, Spring Framework the platform and PostgreSQL the database. The API was deployed on Heroku, and Swagger provides the API interface. Click here to view the project’s GitHub repository. Swagger interface link for the API.

Temperature Storage

Project using an Arduino MEGA 2560 and a temperature sensor. The Arduino was integrated with Java through the RXTX library, and the readings were stored in a MySQL database. Click here to view the project’s GitHub repository.

Photo Organizer

Python program using the Pillow and shutil libraries. It organizes JPG files recursively into year, month and day folders, according to each file’s creation or last-modification date. Click here to view the project’s GitHub repository.
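A minimal sketch of the year/month/day layout described above, with some simplifying assumptions. It uses only the standard library and each file’s last-modification time (via `os.path.getmtime`), whereas the project also uses Pillow, presumably to read dates stored in the photos. The `organize_jpgs` name is hypothetical, and `dest` should lie outside `src`.

```python
import os
import shutil
from datetime import datetime


def organize_jpgs(src, dest):
    """Move every .jpg/.jpeg under src (searched recursively) into
    dest/YYYY/MM/DD, using the file's last-modification time.
    Returns the list of new paths. dest should not be inside src."""
    moved = []
    for root, _dirs, files in os.walk(src):
        for name in files:
            if not name.lower().endswith((".jpg", ".jpeg")):
                continue  # leave non-JPG files where they are
            path = os.path.join(root, name)
            stamp = datetime.fromtimestamp(os.path.getmtime(path))
            target_dir = os.path.join(dest, f"{stamp:%Y}", f"{stamp:%m}", f"{stamp:%d}")
            os.makedirs(target_dir, exist_ok=True)
            target = os.path.join(target_dir, name)
            # This sketch does not handle two photos with the same name.
            shutil.move(path, target)
            moved.append(target)
    return moved
```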

catScanner

This project helps search for vulnerabilities by running scripts from several well-known tools to scan websites. The scanner is only a proof of concept, intended for studying vulnerabilities and how to identify them. This knowledge can be applied to any web project to prevent attacks and leaks. Python 3.8 is the base language, with auxiliary libraries such as threading, subprocess and argparse…. The RapidScan scanner was used as a basis. Click here to view the project’s GitHub repository.

Web Applications

complete-ecommerce

An e-commerce application with a Spring Boot back end and a Vue.js front end, featuring well-structured login and security. Data is saved through the Spring Boot back-end API, which persists it in a PostgreSQL database. Payments use Stripe tokens, and Spring Security handles authentication and authorization. All endpoints are mapped and documented with Swagger, and the Vue.js front end consumes the endpoints created in the back end. The back end uses RabbitMQ to manage product-update queues and the non-relational Redis database as a cache. Tests were written with JUnit and Mockito. Click here to see the project’s GitHub repository.

Remote-Analyser

A web application built with the Spring Framework. It consumes data from the API Gateway and provides login authentication and a web interface for viewing the data. It has three screens: Login, Home (which loads all Alerts with pagination) and Search (which filters data by MAC address). Java 1.8 is the base language and PostgreSQL the database. Login was implemented with Spring Security, the front end with Thymeleaf, and API Gateway consumption with Feign Client. Click here to view the project’s GitHub repository, the project’s website can be accessed via this link.

Notes

Full-stack note management web app built with React, Node.js and MongoDB. It has full CRUD (adding, removing and editing notes). Notes can be marked as priority, and filters show only priority notes, only normal notes, or all notes. Click here to view the project’s GitHub repository.

reps

Project for sharing repositories and APIs. Login is implemented both in memory and in the database, and full CRUD is used to manage the repositories. Site security is handled by Spring Security, which manages both in-memory and database logins. Java 1.8, Thymeleaf 3.0.12, Spring Framework 5.3.7, Spring DevTools and a MariaDB database were used. Click here to view the project’s GitHub repository.

Mudi

The project is an order-control store in which orders are registered and tracked by status. The application has two types of users: one registers orders, and the other makes offers on the orders that have been registered. It was built with Java 1.8 and Spring Boot. Data is handled with Spring Data and stored in a MariaDB database, and Spring MVC handles the web layer. The front end uses Thymeleaf and Bootstrap. The login and security system was built with Spring Security. Click here to view the project’s GitHub repository.

Beatmaker

JavaScript web application for creating beats, with tabs that have sound and visual effects. Click here to see the project’s GitHub repository.

Ecommerce-Spring

E-commerce project with login implemented both in memory and in the database, and full CRUD for customer and product management. Website security is handled by Spring Security. Java, Spring Framework, MariaDB and Thymeleaf were used. Click here to see the project’s GitHub repository.

Ruby_Blogstrap

Blog project built as a proof of concept for Ruby on Rails. Login is implemented both in memory and in the database, with full CRUD for article management. Ruby 3.0.3, a PostgreSQL database and Ruby on Rails 2.7 were used, with Vagrant to virtualize the project and Bootstrap for the front end. Click here to see the project’s GitHub repository.

Data Science & Machine Learning

Hate-Speech-Portuguese

This prediction model is part of a larger project called Remote-Analyser, a system I developed to collect suspicious data from corporate and/or institutional computers, providing more efficient monitoring of these organizations’ assets.

The model was written in Python with several supporting libraries (pandas, sklearn, nltk and numpy). The classifiers (Logistic Regression, Multinomial Naive Bayes and Linear SVC (SVM)) were trained on a dataset and exported with pickle. The exported file is loaded into an API built with Flask. Through its /predict endpoint, the API receives a JSON body containing a sentence to classify as hate speech or not. The input body for the /predict endpoint should look like this: {"value": 0, "phrase": "Testing API"}. The response is a JSON object of the same shape, with "value" set to 0 if the phrase is not hate speech or 1 if it is. Click here to view the project’s GitHub repository.
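The /predict request/response contract above can be sketched independently of Flask. In this hypothetical `handle_predict` helper, `classify` stands in for the pickled classifier (for example, a wrapper that vectorizes the phrase and calls the model’s `predict`). In the real API, this logic would sit inside the Flask route for /predict. The keyword rule in the demo is a toy placeholder, not the trained model.

```python
import json
from typing import Callable


def handle_predict(raw_body: str, classify: Callable[[str], int]) -> str:
    """Parse a /predict body such as {"value": 0, "phrase": "..."} and
    return the same shape with "value" set to the predicted label:
    0 = not hate speech, 1 = hate speech."""
    body = json.loads(raw_body)
    phrase = body["phrase"]
    label = int(classify(phrase))
    if label not in (0, 1):
        raise ValueError(f"classifier returned unexpected label {label!r}")
    return json.dumps({"value": label, "phrase": phrase})


if __name__ == "__main__":
    # Toy keyword rule standing in for the trained classifier (hypothetical).
    toy = lambda text: int("hate" in text.lower())
    print(handle_predict('{"value": 0, "phrase": "Testing API"}', toy))
    # prints {"value": 0, "phrase": "Testing API"}
```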

DeepLearning_Alura

Repository with code from Alura’s Advanced Machine Learning (Deep Learning) course, developed in Python with several machine learning libraries (Keras, matplotlib, scikit-learn, numpy). NLP libraries such as NLTK and techniques such as Bag of Words, TF-IDF and n-grams were also used. Click here to view the project’s GitHub repository.

Find way

Python program using the Tkinter and Pygame libraries. The user marks two points and places obstacles, then presses the spacebar, and the program finds the shortest path connecting the two points while avoiding the obstacles. Click here to view the project’s GitHub repository.
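The description does not name the search algorithm. This sketch assumes breadth-first search on a 4-connected grid, which finds a path with the fewest moves; on such a unit-cost grid, A* or Dijkstra would return a path of the same length. The grid encoding (`#` for an obstacle) and the `shortest_path` name are hypothetical, and the Tkinter/Pygame drawing is omitted.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search over a list of equal-length strings where '#'
    marks an obstacle. Moves are up/down/left/right. Returns the list of
    (row, col) cells from start to goal, or None if goal is unreachable."""
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk the parent links back to start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != "#" and (nr, nc) not in came_from):
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None
```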

Wine Quality API

API that serves a wine quality prediction model and returns its predictions. Python 3.9 was used with the Pandas, Sklearn, Numpy, Flask and Pickle libraries. Click here to view the project’s GitHub repository, the project’s API can be accessed via this link.
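The prediction APIs in this section train a model, export it with pickle and load it in Flask. A minimal sketch of that export/load round trip follows. `ThresholdModel` is a hypothetical stand-in for the trained scikit-learn estimator (any object with a `predict` method works), and `export_model`/`load_model` are illustrative names.

```python
import pickle


class ThresholdModel:
    """Hypothetical stand-in for a trained estimator with a predict method."""

    def __init__(self, feature_index, threshold):
        self.feature_index = feature_index
        self.threshold = threshold

    def predict(self, rows):
        # Label 1 when the chosen feature is above the threshold, else 0.
        return [int(row[self.feature_index] > self.threshold) for row in rows]


def export_model(model, path):
    """Serialize the trained model once, after training."""
    with open(path, "wb") as f:
        pickle.dump(model, f)


def load_model(path):
    """Load the model when the API starts. Only unpickle files you created
    yourself, because unpickling can execute arbitrary code."""
    with open(path, "rb") as f:
        return pickle.load(f)
```

In an API, loading happens once at startup, and each request then only calls `predict`.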

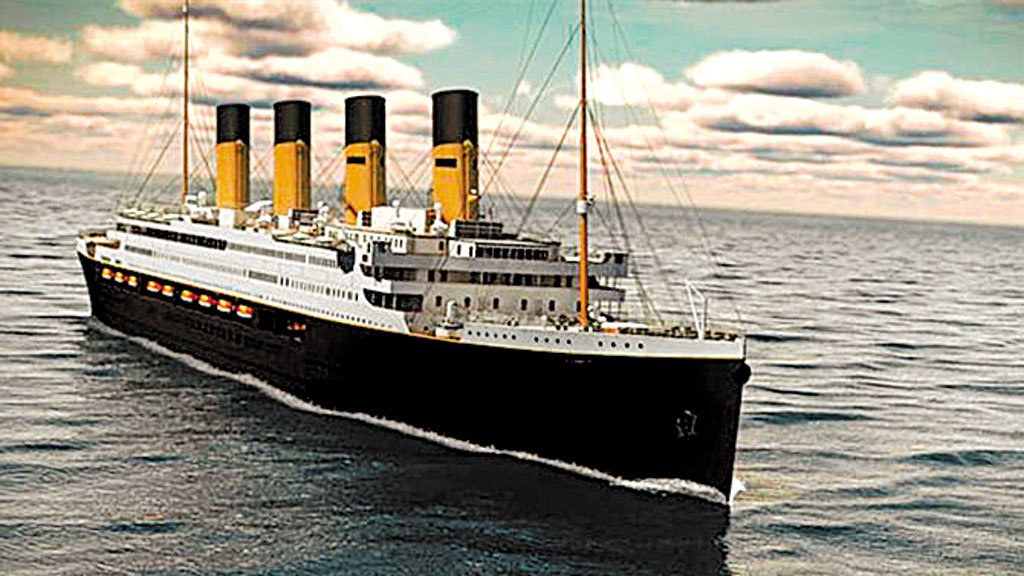

Titanic Survivor API

The project uses a dataset of Titanic survivors and non-survivors, and the model uses this data to predict whether a given passenger survived. Python 3.9 was used with the Pandas, Sklearn, Numpy, Flask and Pickle libraries. Click here to view the project’s GitHub repository, the project’s API can be accessed via this link.

Stroke Prediction API

Stroke prediction model using Python with Sklearn, Pandas, Pickle, Matplotlib and Numpy libraries. The model is made available in an API built with Flask and hosted on Heroku. Click here to view the project’s GitHub repository, the project’s API can be accessed via this link.

Linear Regression

Linear regression project in Python using the Pandas, Numpy, Sklearn and Matplotlib libraries. It analyzes CO2 emission data and correlates it with the type of car engine. It then builds a prediction model to estimate the CO2 emissions of engines that are not in the analyzed data. Click here to view the project’s GitHub repository.
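For a single numeric predictor (for example, engine size; the exact feature used is an assumption), the least-squares line has a closed form: slope = Σ(x − x̄)(y − ȳ) / Σ(x − x̄)² and intercept = ȳ − slope·x̄. This pure-Python sketch computes it. scikit-learn’s `LinearRegression` solves the same problem.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x.
    Returns (intercept, slope)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def predict_y(intercept, slope, x):
    """Predicted y for a new x, e.g. an engine not seen in the data."""
    return intercept + slope * x
```

With toy values, `fit_line([1, 2, 3, 4], [3, 5, 7, 9])` returns `(1.0, 2.0)`.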

Decision Tree

Analysis of data from patients who were tested for SARS-CoV-2. The data first had to be cleaned so that only the records containing the needed fields were kept. These fields are the Hemoglobin, Leukocytes, Basophils and C-reactive Protein (mg/dL) test results. A decision tree was then trained, and it identified Leukocytes as the most important feature. Click here to view the project’s GitHub repository.
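A decision tree chooses splits by how much they reduce impurity, which is also the basis of scikit-learn’s default `feature_importances_`. The sketch below computes Gini impurity and, for each feature, the best single threshold split. The feature with the largest decrease is the one the root node would use. It only examines the root (scikit-learn sums the decreases over all nodes), and the usage values are made-up toy numbers, not the patients’ data.

```python
def gini(labels):
    """Gini impurity: 1 - sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = {}
    for label in labels:
        counts[label] = counts.get(label, 0) + 1
    return 1.0 - sum((c / n) ** 2 for c in counts.values())


def best_split(values, labels):
    """Return (impurity_decrease, threshold) for the best 'value <= t' split."""
    parent, n = gini(labels), len(labels)
    best = (0.0, None)
    for t in sorted(set(values))[:-1]:  # the largest value would leave one side empty
        left = [y for v, y in zip(values, labels) if v <= t]
        right = [y for v, y in zip(values, labels) if v > t]
        child = (len(left) * gini(left) + len(right) * gini(right)) / n
        if parent - child > best[0]:
            best = (parent - child, t)
    return best


def root_feature(columns, labels):
    """Name of the feature whose best split reduces Gini impurity the most."""
    return max(columns, key=lambda name: best_split(columns[name], labels)[0])
```

Take toy columns `{"Leukocytes": [1, 2, 8, 9], "Hemoglobin": [5, 6, 5, 6]}` with labels `[0, 0, 1, 1]`. Here `root_feature` returns `"Leukocytes"`, because splitting at 2 separates the classes perfectly (a Gini decrease of 0.5), while no Hemoglobin threshold helps.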

Analytics House Datas

Analysis of Seattle real estate data, with filterable tables, graphs and histograms. The analysis covers many details and uses tools such as pandas, geopandas, streamlit, numpy, datetime and other Python 3.9 libraries. The web application was built with Streamlit and deployed on Heroku. Click here to see the project’s GitHub repository, the web application can be accessed at this link.

Lotka-Volterra

Project in which I implement two ways of running a predator-prey simulation based on the Lotka-Volterra model. It was developed in Python with the Matplotlib, NumPy and SciPy libraries. Click here to view the project’s GitHub repository.
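The Lotka-Volterra equations are dx/dt = αx − βxy (prey) and dy/dt = δxy − γy (predators). This sketch integrates them with a hand-written fourth-order Runge-Kutta step, so it runs on the standard library alone. It is not necessarily either of the two approaches used in the project, which relies on SciPy; there, `scipy.integrate.solve_ivp` would be the usual choice. The parameter values in the usage note are illustrative.

```python
def lotka_volterra(state, alpha, beta, delta, gamma):
    """Time derivatives (dx/dt, dy/dt) for prey x and predators y."""
    x, y = state
    return (alpha * x - beta * x * y, delta * x * y - gamma * y)


def simulate(x0, y0, params, dt, steps):
    """Integrate with classic 4th-order Runge-Kutta.
    params = (alpha, beta, delta, gamma). Returns a list of (x, y) pairs."""
    x, y = x0, y0
    trajectory = [(x, y)]
    for _ in range(steps):
        k1 = lotka_volterra((x, y), *params)
        k2 = lotka_volterra((x + dt / 2 * k1[0], y + dt / 2 * k1[1]), *params)
        k3 = lotka_volterra((x + dt / 2 * k2[0], y + dt / 2 * k2[1]), *params)
        k4 = lotka_volterra((x + dt * k3[0], y + dt * k3[1]), *params)
        x += dt / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        y += dt / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        trajectory.append((x, y))
    return trajectory
```

For example, `simulate(10.0, 5.0, (1.0, 0.1, 0.075, 1.5), dt=0.01, steps=1500)` returns 1,501 (prey, predator) pairs that can be plotted with Matplotlib. Starting at the equilibrium (γ/δ, α/β) = (20, 10) keeps both populations constant.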

Descending Gradient

Program that fits a prediction model using gradient descent with the MSE cost function. It is written in Python with the PyTorch, NumPy, Sklearn and Matplotlib libraries. Click here to view the project’s GitHub repository.
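Consider a line ŷ = w·x + b. The MSE cost L = (1/n) Σ (ŷᵢ − yᵢ)² has gradients ∂L/∂w = (2/n) Σ (ŷᵢ − yᵢ)xᵢ and ∂L/∂b = (2/n) Σ (ŷᵢ − yᵢ). The project uses PyTorch, which would compute these gradients automatically. This standard-library sketch writes them out by hand, and its learning rate and epoch count are illustrative assumptions.

```python
def mse(xs, ys, w, b):
    """Mean squared error of the line w * x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient_descent(xs, ys, lr=0.05, epochs=2000):
    """Fit w and b by batch gradient descent on the MSE cost."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        w -= lr * grad_w  # step against the gradient
        b -= lr * grad_b
    return w, b
```

On points from y = 2x + 1, such as `gradient_descent([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])`, the result approaches w = 2 and b = 1. A learning rate that is too large would make the updates diverge instead.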

Softmax Regression

A simple Softmax Regression model built with PyTorch, NumPy and Matplotlib. It uses the cross-entropy cost function to update the model’s parameters. Click here to view the project’s GitHub repository.
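Softmax regression turns one score per class into probabilities pₖ = exp(zₖ) / Σⱼ exp(zⱼ). The cross-entropy loss for a sample of class c is −log p_c, and its gradient with respect to each score zₖ is simply pₖ − 1[k = c]. The sketch below uses that gradient for batch gradient descent. It is a standard-library stand-in for the PyTorch version, where `torch.nn.CrossEntropyLoss` and autograd do this work. The hyperparameters are illustrative.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def class_scores(W, b, x):
    """One linear score z_k = W[k] . x + b[k] per class."""
    return [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]


def cross_entropy(W, b, X, y):
    """Mean of -log p(true class) over the samples."""
    return sum(-math.log(softmax(class_scores(W, b, x))[c]) for x, c in zip(X, y)) / len(X)


def train_softmax(X, y, n_classes, lr=0.1, epochs=500):
    n, d = len(X), len(X[0])
    W = [[0.0] * d for _ in range(n_classes)]
    b = [0.0] * n_classes
    for _ in range(epochs):
        grad_W = [[0.0] * d for _ in range(n_classes)]
        grad_b = [0.0] * n_classes
        for x, c in zip(X, y):
            p = softmax(class_scores(W, b, x))
            for k in range(n_classes):
                err = p[k] - (1.0 if k == c else 0.0)  # dLoss/dz_k
                grad_b[k] += err / n
                for j in range(d):
                    grad_W[k][j] += err * x[j] / n
        for k in range(n_classes):
            b[k] -= lr * grad_b[k]
            for j in range(d):
                W[k][j] -= lr * grad_W[k][j]
    return W, b


def predict_class(W, b, x):
    """Index of the class with the highest score."""
    scores = class_scores(W, b, x)
    return max(range(len(scores)), key=scores.__getitem__)
```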

Logistic Regression

A simple Logistic Regression model built with PyTorch, NumPy and Matplotlib. It uses the binary cross-entropy cost function to update the model’s parameters. Click here to view the project’s GitHub repository.
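Logistic regression passes a linear score through the sigmoid σ(z) = 1 / (1 + e^(−z)) and is trained with binary cross-entropy, −[y log p + (1 − y) log(1 − p)]. The gradient of that loss with respect to the score is p − y. This standard-library sketch mirrors what PyTorch’s `torch.nn.BCELoss` and autograd would handle in the project. The hyperparameters are illustrative.

```python
import math


def sigmoid(z):
    # Two branches keep math.exp from overflowing for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def bce(p, target, eps=1e-12):
    """Binary cross-entropy for one prediction p and label target (0 or 1)."""
    p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
    return -(target * math.log(p) + (1 - target) * math.log(1.0 - p))


def train_logistic(X, y, lr=0.1, epochs=1000):
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, target in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
            err = p - target  # dLoss/dz for sigmoid + BCE
            grad_b += err / n
            for j in range(d):
                grad_w[j] += err * x[j] / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict_proba(w, b, x):
    """Probability of class 1 for feature vector x."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
```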

Iris Prediction

Project that analyzes the Iris input data, plots its scatterplot, trains a prediction model, plots the cost curve, and makes predictions on test data. Click here to view the project’s GitHub repository.